AI as Societal Infrastructure

2025-08-01

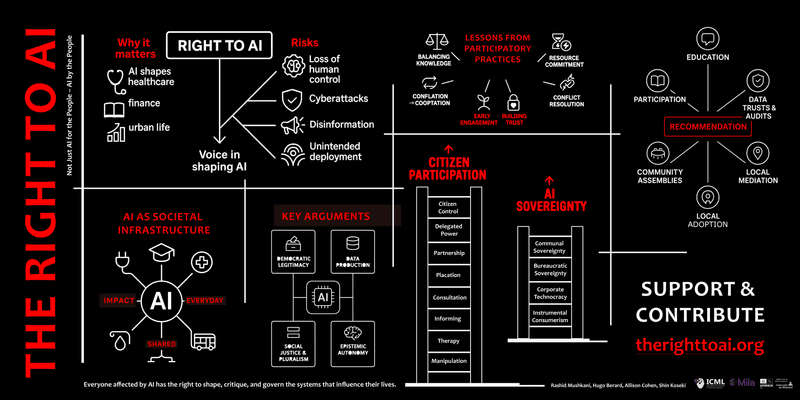

AI is everywhere now: it decides what we see online, shapes our cities, and influences healthcare, finance, and education. Yet most of us have almost no say in how these systems are created or governed.

Why This Matters

Right now, AI is largely in the hands of a few companies and institutions. The public is treated as an audience, not as co-authors of the technology that will shape our future. This imbalance risks embedding bias, sidelining communities, and eroding trust.

If AI is going to be as essential as roads, schools, or public parks, then it must be designed and run with the same spirit of shared ownership and public accountability.

What is The Right to AI?

In 2022, a collaboration with over thirty community organizations across Montréal set out to explore the city’s spaces and improve them through emerging technologies like AI. What started as a research initiative has since evolved into a nonprofit movement with a clear vision: AI not just for the people, but by the people.

- We host workshops where communities experiment with and critique real AI systems, surfacing both their potential benefits and their potential harms.

- We publish open research on how to involve the public at every stage, from collecting and managing data, to designing models, to overseeing how they’re deployed.

- We work with policymakers so that those most affected by automated systems are no longer the last to be consulted.

The Paper

Our foundational paper borrows Henri Lefebvre's concept of the Right to the City and applies it to AI. It makes the case that AI is now part of our social infrastructure and must be treated as a shared resource. It examines challenges such as generative agents, mass data extraction, and conflicting values across cultures, and it offers practical steps toward more democratic governance.

The heart of it is simple: participation must matter. It should change how data is governed, how models are trained, and whether certain systems are deployed at all.

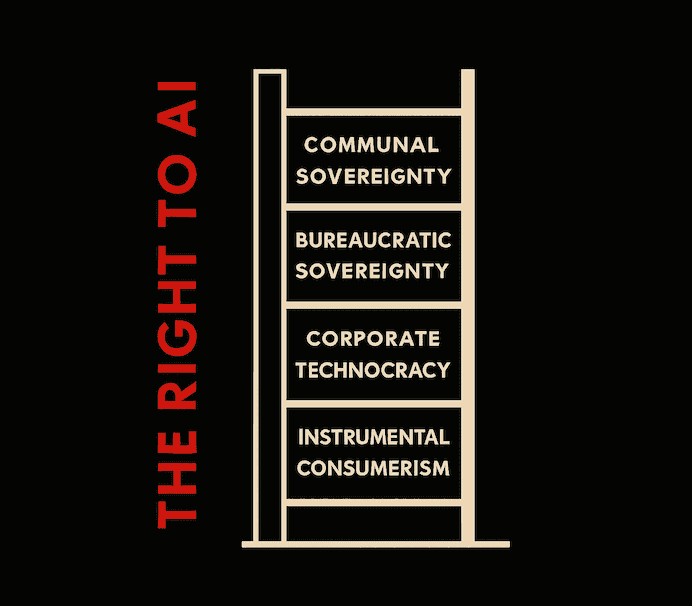

A Ladder of Participation

At the bottom rung, people are just consumers, clicking “accept” and occasionally filling out feedback forms, while decisions stay centralized.

One rung up, organizations invite limited input and offer more transparency, but the real power stays with them.

Higher still, government-controlled models add regulations and public consultations. These help, but they can miss the nuances of local realities.

At the top is citizen control: community assemblies, data trusts, and shared ownership of AI systems, where everyday people and experts decide together on risks, goals, and oversight.

What We’ve Learned

Engaging communities early and keeping them involved prevents tokenism. Pairing technical expertise with lived experience helps ensure that AI reflects real needs and values. But this work must be resourced through training, open tools, and institutional backing; otherwise participation becomes symbolic rather than meaningful. Above all, community input must lead to real changes in policy, design, and deployment.

Moving Forward

We need to build AI literacy so people can confidently engage in these conversations.

We need accessible tools and interfaces for everyday participation.

We need local AI councils that start in advisory roles and grow into bodies with real decision-making power.

We need community-run data trusts and public audits.

And we need to localize AI models so they serve the cultures and contexts they operate in.

How You Can Help

You can volunteer to bring the Right to AI into your community.

You can join research projects exploring new ways to involve the public in AI governance.

You can collaborate on workshops, studies, or pilot programs.

Contact: contact@therighttoai.org

Explore More

The Right to AI (nonprofit)

About The Right to AI

Book: The Right to AI

Tags: AI Governance; Participation; Pluralism; Open Source; Data Stewardship; Workshops; Montréal