MID-Space: Aligning Diverse Communities’ Needs to Inclusive Public Spaces

2024-12-15

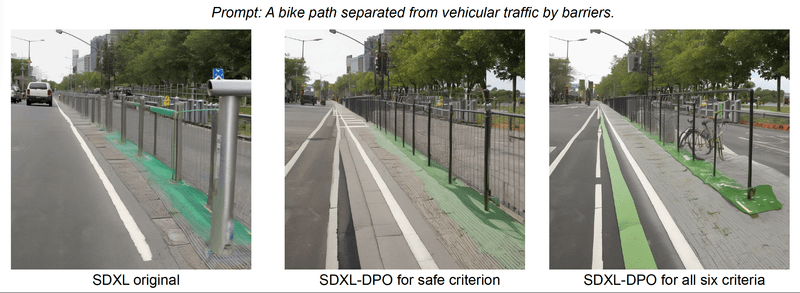

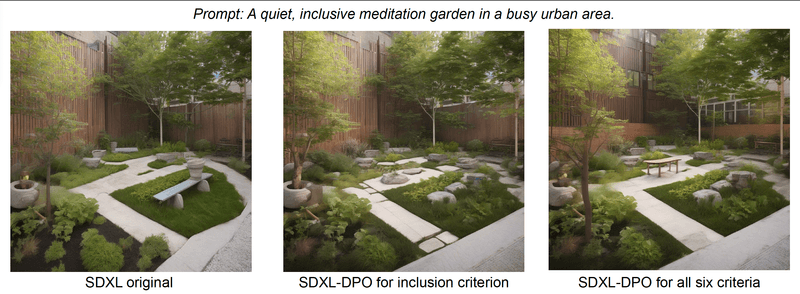

Example visualizations generated using Stable Diffusion XL, fine-tuned with the MID-Space dataset.

Project Team: Shravan Nayak, Rashid Mushkani, Hugo Berard, Allison Cohen, Shin Koseki, Hadrien Bertrand, Emmanuel Beaudry Marchand, Toumadher Ammar, Jerome Solis.

Project Overview

The MID-Space dataset bridges the gap between AI-generated visualizations and diverse community preferences in public space design. Created through participatory workshops and used to fine-tune Stable Diffusion XL, the dataset helps align AI outputs with six key criteria:

- Accessibility

- Safety

- Diversity

- Inclusivity

- Invitingness

- Comfort

This initiative empowers marginalized communities to actively shape urban design, promoting inclusive, equitable, and user-centered public spaces.

Dataset Features

- Textual Prompts: 3,350 prompts representing diverse public space typologies.

- AI-Generated Images: 13,465 visualizations created with Stable Diffusion XL.

- Annotations: Over 42,000 raw and 35,000 distinct annotations, rating preferences on a -1 to +1 scale for up to three criteria per image pair.
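The annotation format described above can be pictured as one small record per judgment. The sketch below is a minimal illustration, not the dataset's actual schema. The field names (`prompt`, `image_a`, `image_b`, `scores`), the requirement of at least one criterion, and the sign convention (negative favors the first image, positive the second) are all assumptions.

```python
from dataclasses import dataclass

# The six alignment criteria named in the project overview.
CRITERIA = {"accessibility", "safety", "diversity",
            "inclusivity", "invitingness", "comfort"}


@dataclass
class PairAnnotation:
    """Hypothetical record for one annotator's judgment of an image pair."""
    prompt: str
    image_a: str  # hypothetical image identifier
    image_b: str
    # Criterion -> preference in [-1, 1]. Assumed sign convention:
    # negative favors image_a, positive favors image_b.
    scores: dict[str, float]

    def __post_init__(self) -> None:
        # "Up to three criteria per image pair"; at least one is assumed.
        if not 1 <= len(self.scores) <= 3:
            raise ValueError("expected one to three criteria per pair")
        for criterion, score in self.scores.items():
            if criterion not in CRITERIA:
                raise ValueError(f"unknown criterion: {criterion}")
            if not -1.0 <= score <= 1.0:
                raise ValueError(f"score out of range for {criterion}: {score}")


ann = PairAnnotation(
    prompt="a shaded plaza with benches",  # made-up prompt for illustration
    image_a="img_0001.png",
    image_b="img_0002.png",
    scores={"comfort": 0.6, "safety": -0.2},
)
```

Validating at construction time catches out-of-range scores or misspelled criteria before any aggregation step.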

Data Collection Process

- Community Workshops:

  - Conducted three workshops with diverse Montreal communities to identify the six alignment criteria.

  - Produced 440 textual prompts, supplemented with 2,910 synthetic prompts generated using GPT-4 (3,350 in total).

- Image Generation:

  - Stable Diffusion XL generated 20 images per prompt, which were then refined using CLIP similarity scoring.

- Human Annotation:

  - Sixteen annotators evaluated image pairs through an accessible web interface.
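The CLIP-based refinement step is not described in detail here. One plausible reading is to rank each prompt's candidate images by the cosine similarity between a CLIP text embedding of the prompt and the CLIP image embeddings, then keep the top `k`. The sketch below follows that reading under stated assumptions: the embeddings are precomputed, the function names are hypothetical, and a similarity threshold would be an equally reasonable alternative to top-`k`. If every one of the 3,350 prompts received 20 images, that would give 67,000 candidates against the 13,465 released images, which suggests some selection took place.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def select_top_k(text_emb: list[float],
                 image_embs: dict[str, list[float]],
                 k: int) -> list[str]:
    """Hypothetical filter: keep the k images whose embeddings best match the prompt.

    Assumes text_emb and image_embs come from the same CLIP model, so they
    live in a shared embedding space.
    """
    ranked = sorted(
        image_embs,
        key=lambda name: cosine_similarity(text_emb, image_embs[name]),
        reverse=True,
    )
    return ranked[:k]


# Toy 2-D vectors standing in for real CLIP embeddings.
prompt_emb = [1.0, 0.0]
candidates = {"img_a": [0.9, 0.1], "img_b": [0.0, 1.0], "img_c": [0.7, 0.7]}
print(select_top_k(prompt_emb, candidates, k=2))  # ['img_a', 'img_c']
```

Cosine similarity is used because CLIP compares text and images by the angle between their embeddings, so vector length does not affect the ranking.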


Applications

The MID-Space dataset is a valuable resource for:

- AI Alignment Research: Developing models that better reflect pluralistic human values.

- Urban Design: Crowd-sourcing input for inclusive public space design.

- Generative AI Tools: Enhancing text-to-image models for equity-focused visualization tasks.
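For the alignment and generative-model uses listed above, pairwise ratings are often turned into (preferred, rejected) pairs for preference-learning methods such as reward modeling or direct preference optimization. The sketch below shows one such conversion. It assumes a sign convention in which a positive score favors the second image. The function name, the `min_margin` tie threshold, and the choice to emit one pair per criterion are illustrative assumptions, not a documented part of MID-Space.

```python
from typing import NamedTuple


class PreferencePair(NamedTuple):
    prompt: str
    chosen: str
    rejected: str
    criterion: str
    strength: float  # |score|, usable as an optional per-pair weight


def to_preference_pairs(prompt: str, image_a: str, image_b: str,
                        scores: dict[str, float],
                        min_margin: float = 0.0) -> list[PreferencePair]:
    """Hypothetical conversion of one pair annotation into per-criterion pairs.

    Assumed sign convention: score > 0 favors image_b, score < 0 favors image_a.
    Scores with |score| <= min_margin are treated as ties and skipped.
    """
    pairs = []
    for criterion, score in scores.items():
        if abs(score) <= min_margin:
            continue
        chosen, rejected = (image_b, image_a) if score > 0 else (image_a, image_b)
        pairs.append(PreferencePair(prompt, chosen, rejected, criterion, abs(score)))
    return pairs


pairs = to_preference_pairs(
    "a plaza with shaded seating",  # made-up prompt for illustration
    "a.png", "b.png",
    {"comfort": 0.6, "safety": -0.4, "diversity": 0.0},
)
for p in pairs:
    print(p.criterion, p.chosen, p.strength)
```

Skipping near-zero scores avoids training on pairs where annotators expressed little preference. Keeping `strength` lets a downstream loss weight stronger judgments more heavily, if desired.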